Question

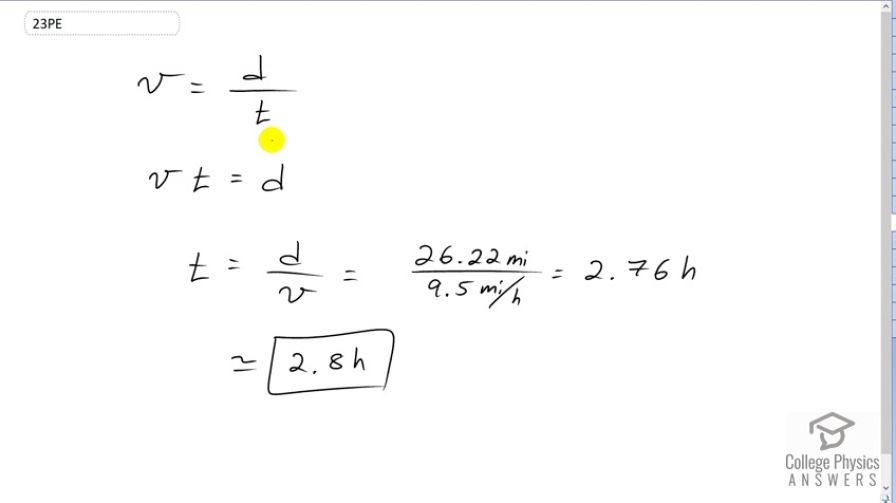

If a marathon runner averages 9.5 mi/h, how long does it take him or her to run a 26.22 mi marathon?

Final Answer

2.8 h

OpenStax College Physics, Chapter 1, Problem 23 (Problems & Exercises)

Video Transcript

This is College Physics Answers with Shaun Dychko. Let's begin with the speed formula, which says that speed is distance divided by time. We'll multiply both sides by time, because that's what we want to solve for, to get it out of the denominator. It cancels on the right side, leaving us with speed times time on the left equals distance on the right. Then we'll divide both sides by the speed, which cancels on the left and isolates t on its own: t equals distance divided by speed. So we have 26.22 miles divided by 9.5 miles per hour, which is 2.76 hours. Our time unit, of course, is the same as the time unit in our speed.

This is not our final answer, though, because the calculation involves division, with a number that has four significant figures on top and a number that has two significant figures on the bottom. When dividing or multiplying, the answer has as many significant figures as the number with the fewest significant figures in the question. That is the two significant figures in the denominator, so we round to two significant figures for a final answer of 2.8 hours.
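The arithmetic in the transcript can be checked with a short Python sketch. The helper `round_sig` and the variable names are assumptions made for illustration and are not part of the original solution. The number of significant figures (two) is passed in by hand, following the reasoning in the transcript.

```python
from math import floor, log10


def round_sig(value: float, sig_figs: int) -> float:
    """Round value to sig_figs significant figures (hypothetical helper)."""
    if value == 0:
        return 0.0
    # The position of the leading digit sets how many decimal places to keep.
    decimals = sig_figs - 1 - floor(log10(abs(value)))
    return round(value, decimals)


distance_mi = 26.22    # marathon distance, four significant figures
speed_mi_per_h = 9.5   # average speed, two significant figures

# Rearranged speed formula: t = d / v
time_h = distance_mi / speed_mi_per_h

# The less precise input (9.5 mi/h) limits the answer to two significant figures.
final_time_h = round_sig(time_h, 2)

print(f"unrounded: {time_h:.2f} h")
print(f"final answer: {final_time_h} h")
```

Because the miles cancel in the division, the result comes out in hours, matching the time unit of the speed.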